I am a Software Developer at Precision Analytics. I have experience working on and building data-driven applications, and enjoy …

Shiny and Docker

I recently joined Precision Analytics as a Software Developer. One of my first tasks was to implement a new feature in a large bioinformatics Shiny application. Shiny is an R package for building web applications in R. It is especially popular for data science applications because it lets developers write an app's functionality entirely in R, without needing to know the underlying web technologies (such as HTML, CSS, and JavaScript).

The first thing I did was try to run the application locally on my computer so that I could implement the feature interactively. But I quickly ran into an issue: the application wouldn’t run correctly, even though the same application ran seamlessly on my coworkers’ computers.

After some troubleshooting, we discovered the root of the problem. The application uses version 3.6.1 of R, so that was the version I had installed. However, the build I had installed (x64) was not compatible with my computer’s processor architecture (arm64). Pre-built R releases for newer processor architectures are only provided for recent 4.x releases of R, not for version 3.6.1, so I needed another solution. My coworkers had not run into this issue because their computers use the x64 architecture, which is exactly what the x64 build of R 3.6.1 targets.

I first tried installing a newer version of R (4.2.2, the latest at the time) built for the correct architecture, and updated the project’s renv.lock file to reference this version. However, when I attempted to run the application, it quickly became apparent that the breaking changes between major versions of R would need to be addressed: the upgrade caused even more problems than the ones I had originally encountered. Our application is large, and I am new to working with R and Shiny, so identifying and updating the code to run with the latest version of R seemed quite daunting!

While there were many different avenues we could have pursued to get things running, the issues I was facing seemed like an ideal use case for introducing Docker to our project.

Docker is a popular tool in web development that is used to containerize applications. Containers bundle application code with all of the resources it needs to run, which is particularly useful when we want to run an application that was written or compiled for a different operating system. One of Docker's many benefits is portability: applications running on Docker can run on any machine that has the Docker engine installed, and Docker can even run an image built for x64 on an arm64 machine through emulation. Everything the application needs to run is included in the container, which ensures consistent behaviour across different systems.

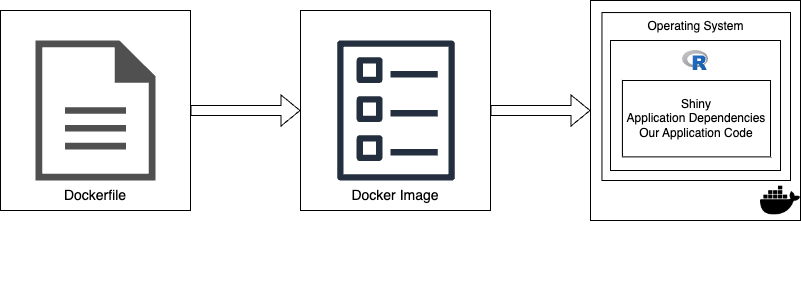

Fig 1. Dockerfiles are text files that Docker uses to build images. Images are templates that, when run, launch Docker containers. Our Docker container had all of the necessary resources to run our Shiny application.

Docker containers are created from Docker images. Images are templates that specify how to create a container, and they are built from Dockerfiles: text files containing the instructions to build the image. Our container needed to include R (version 3.6.1, specifically!). Fortunately, an image for this already exists: the Rocker Project provides a Docker image that includes the version of R that we needed, so we used it as the base image in our Dockerfile.
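A minimal sketch of that first step, assuming the `rocker/r-ver:3.6.1` tag from the Rocker Project (the post doesn't name the exact image or tag). As far as we know, Rocker's R 3.x images are only published for x64 (`linux/amd64`), so the `--platform` flag asks Docker to use that variant and run it under emulation on an arm64 machine. The `RUN` line is an optional sanity check we're adding for illustration.

```dockerfile
# Assumption: the base image is Rocker's rocker/r-ver:3.6.1 tag.
# --platform requests the x64 (linux/amd64) variant, which Docker
# runs under emulation on an arm64 machine.
FROM --platform=linux/amd64 rocker/r-ver:3.6.1

# Fail the build early if the image doesn't provide the R version we expect.
RUN Rscript -e 'stopifnot(getRversion() == "3.6.1")'
```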

We also needed our container to include the resources required to run a Shiny server, as well as the numerous R packages our application depends on. We did this by adding lines to our Dockerfile that told Docker what to install on top of the base image (which contains the OS and R), and how. Building the image from this Dockerfile turned out to be very slow: compiling and installing all of our project's dependencies could take Docker hours! We avoided repeating this impractically long build by storing a copy of the built image in AWS, which gave us a ready-made image with all of the relevant dependencies already installed.
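A sketch of what such a dependency image could look like. Several details are assumptions rather than our exact setup: the system libraries are placeholders for whatever the project's packages need, the R packages are restored from the project's `renv.lock` with `renv::restore()` into the image's site library, and we assume a renv release that still installs on R 3.6.1. Copying only the lockfile before restoring lets Docker reuse this slow layer from its build cache as long as `renv.lock` doesn't change.

```dockerfile
# Assumption: same Rocker base image as before, pinned to x64 (linux/amd64).
FROM --platform=linux/amd64 rocker/r-ver:3.6.1

# Placeholder system libraries often needed to compile R packages from
# source; the real list depends on the project's packages.
RUN apt-get update \
    && apt-get install -y --no-install-recommends \
        libcurl4-openssl-dev \
        libssl-dev \
        libxml2-dev \
    && rm -rf /var/lib/apt/lists/*

WORKDIR /app

# Copy only the lockfile, so this expensive layer is rebuilt only when the
# recorded dependencies change, not on every code change.
COPY renv.lock renv.lock

# Install renv, then install the package versions recorded in renv.lock
# into the image's first (site) library.
RUN Rscript -e 'install.packages("renv", repos = "https://cloud.r-project.org")' \
    && Rscript -e 'renv::restore(lockfile = "renv.lock", library = .libPaths()[1], prompt = FALSE)'
```

Built once (for example with `docker build -t shiny-app-deps .`), this image can be tagged and pushed to a registry so nobody has to repeat the hours-long build. The post only says the copy was stored in AWS; Amazon ECR is one registry that could hold it.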

With an image containing all of our dependencies, we were able to launch a container that had all of the necessary resources to run our Shiny application. The final step was to copy the application files (i.e., the code for the application) into the image, which took just one more line in the Dockerfile.
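A sketch of that final Dockerfile, starting from the stored dependency image and adding the application code on top. The registry URI is hypothetical (an ECR-style address with a placeholder account ID and image name), and starting the app with `shiny::runApp()` on port 3838 is our assumption, standing in for whatever server configuration the real project uses.

```dockerfile
# Hypothetical registry URI for the pre-built dependency image stored in AWS.
FROM --platform=linux/amd64 123456789012.dkr.ecr.us-east-1.amazonaws.com/shiny-app-deps:latest

WORKDIR /app

# The one extra line: add the application code on top of the dependencies.
COPY . /app

# EXPOSE documents the app's port; host = '0.0.0.0' makes Shiny listen on
# all interfaces so the app is reachable through a published port.
EXPOSE 3838
CMD ["Rscript", "-e", "shiny::runApp('/app', host = '0.0.0.0', port = 3838)"]
```

Something like `docker build -t shiny-app .` followed by `docker run -p 3838:3838 shiny-app` would then serve the app at `http://localhost:3838`. If the project's `.Rprofile` activates renv, it may need to be excluded via a `.dockerignore` file so that R uses the packages installed in the image's site library.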

With Docker, I was finally able to run our Shiny application on my computer with no errors, which enabled me to add the feature originally requested by our client!

At Precision Analytics, our services include helping our clients determine the best way to deploy, host, and maintain their data science applications. Please get in touch to learn more!